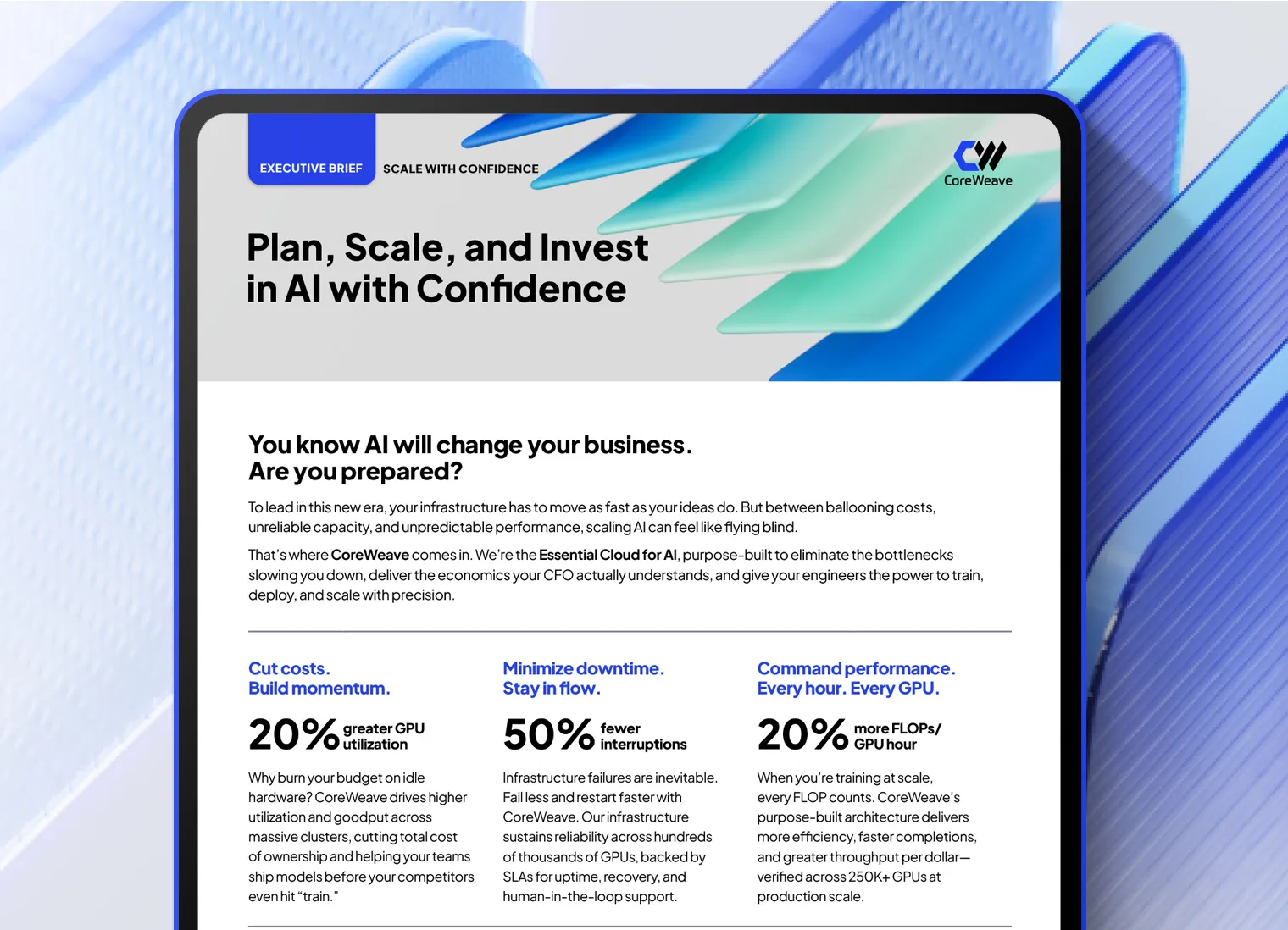

Achieve 20% greater performance and 10× reliability from your AI infrastructure

Stop wasting valuable time and money on idle infrastructure. Start driving measurable results: faster time-to-market, lower TCO, and greater stability for your large-scale training runs.

This solution brief, "Scale AI Training Without Slowdowns," summarizes CoreWeave’s results from benchmarking a 70B-parameter model on 1,024 NVIDIA H100 GPUs. You’ll learn what really causes downtime at scale, how to overcome the industry’s most persistent AI bottlenecks, and why AI innovators around the world count on CoreWeave for uncompromising performance, scale, and reliability.

- Eliminate wasted GPU cycles to maximize every training dollar

- Achieve predictable schedules with 10× fewer job interruptions

- Launch models 7–15 days faster, with confidence