What is a GPU and its role in AI?

Artificial intelligence (AI) is rapidly changing the world, assisting in everything from data security to medical diagnosis systems. Behind this AI revolution is an important piece of hardware: the Graphics Processing Unit or GPU. Originally created for graphics rendering, GPUs have become increasingly important for AI, enabling the training and deployment of complex AI models that were once impossible to imagine.

What is a GPU?

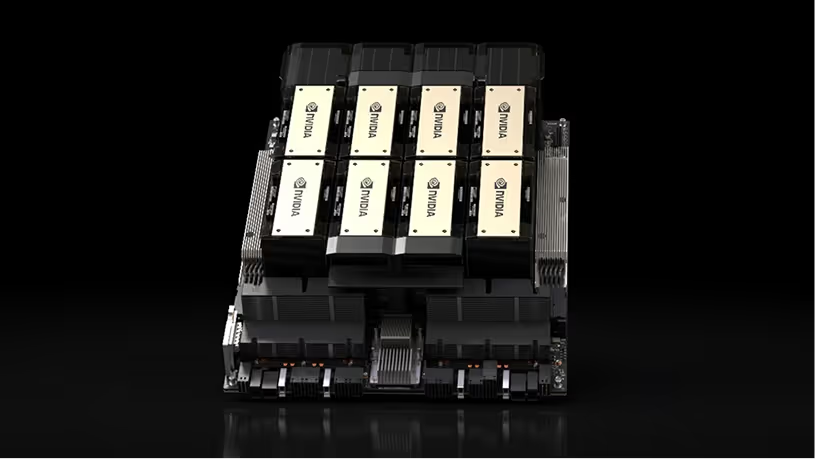

A GPU, short for Graphics Processing Unit, is a specialized electronic circuit originally designed to speed up the creation of images and videos. However, its remarkable ability to perform vast numbers of calculations rapidly has led to its adoption in diverse fields, including artificial intelligence and scientific computing, where it excels at handling data-intensive and computationally demanding tasks.

GPU chips are fabricated on a silicon wafer, a thin, circular slice of highly purified silicon. This wafer serves as the base upon which billions of microscopic transistors are etched. These transistors act as miniature gates controlling the flow of electricity, forming the building blocks of the GPU's logic circuits. A complex network of microscopic metal wires, called interconnects, links these transistors, enabling them to communicate and perform calculations. Finally, the finished chip is encased in a protective package made of materials like plastic, ceramic, and metal, which shields the delicate circuitry and helps dissipate the heat generated during operation.

GPU vs CPU

GPUs, with their specialized architecture, occupy a middle ground between general-purpose CPUs and processors purpose-built for AI, such as TPUs.

CPUs, or Central Processing Units, excel at sequential processing: they work through a small number of instruction streams very quickly. GPUs, by contrast, are designed to process many operations at the same time. CPUs are also often lower in cost and work well for some AI workloads, such as inference, while GPUs are a strong choice for training or running highly complex models.

Compared with processors built solely for AI, GPUs can handle a broader range of algorithms and tasks. This versatility suits researchers and developers who need to experiment with different AI approaches or use cases such as deep learning.

Both GPUs and CPUs are options available as part of an AI Hypercomputer architecture.

How GPUs work

GPUs work by performing a large number of operations at the same time. They achieve this by having a large number of processing cores that can work on different parts of a task simultaneously. This parallel processing architecture allows GPUs to handle tasks that would take CPUs much longer to complete.

Imagine a task that can be broken down into thousands of smaller, independent steps. A GPU can distribute these steps across its many cores, allowing them to be computed concurrently. This parallel processing capability is what gives GPUs their significant advantage over CPUs, especially in areas like image and video processing, scientific simulations, and, notably, machine learning, where large datasets and complex algorithms are the norm.
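The idea of splitting a task into independent steps can be sketched in a few lines of Python. This is only an analogy that runs on a CPU, not GPU code: the function names (`brighten`, `parallel_brighten`) and the pixel-brightening task are assumptions made for illustration, and Python threads will not deliver the speedup that real GPU cores do. What the sketch shows is the decomposition itself: each chunk of data is processed without reference to any other chunk.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int, amount: int = 40) -> int:
    """One small, independent step: brighten a single 8-bit pixel value."""
    return min(255, pixel + amount)


def brighten_chunk(chunk: list[int]) -> list[int]:
    """Work assigned to one worker: process its own slice of the data."""
    return [brighten(p) for p in chunk]


def split(data: list[int], parts: int) -> list[list[int]]:
    """Split data into at most `parts` contiguous chunks of near-equal size."""
    size = max(1, -(-len(data) // parts))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_brighten(pixels: list[int], workers: int = 4) -> list[int]:
    """Brighten all pixels by handing one chunk to each worker."""
    chunks = split(pixels, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves chunk order, so results can be concatenated directly.
        results = list(pool.map(brighten_chunk, chunks))
    return [p for chunk in results for p in chunk]


pixels = [0, 100, 200, 250, 30, 220]
print(parallel_brighten(pixels))  # [40, 140, 240, 255, 70, 255]
```

Because no pixel depends on any other, the same work could in principle be spread over thousands of cores instead of four workers, which is the situation a GPU is designed for.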

Frequently asked questions

What’s the difference between a CPU and a GPU when it comes to AI processing?

A CPU (Central Processing Unit) is designed for general-purpose tasks and excels at handling a few tasks at a time with high flexibility. A GPU (Graphics Processing Unit), on the other hand, is built for parallel processing, making it ideal for handling thousands of operations simultaneously, a key advantage in AI workloads like neural network training and inference.

Why are GPUs preferred over CPUs for training AI models?

GPUs are optimized for the parallel matrix and vector operations that underpin deep learning. This allows them to process large volumes of data much faster than CPUs. For tasks like training large language models or image recognition systems, a GPU can significantly reduce training time from weeks to days or even hours.
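To see why matrix operations parallelize so well, consider a minimal pure-Python matrix multiplication. The helper names (`dot`, `matmul`) and the example values are assumptions for illustration, and the code runs sequentially on a CPU. The point is structural: every cell of the output is a separate dot product that needs only one row and one column of the inputs.

```python
from collections.abc import Sequence


def dot(row: Sequence[float], col: Sequence[float]) -> float:
    """Dot product: multiply element pairs and sum the results."""
    return sum(a * b for a, b in zip(row, col))


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply matrix a (n x k) by matrix b (k x m)."""
    cols_of_b = list(zip(*b))  # transpose b so each column is easy to reach
    # Each output cell depends only on one row of a and one column of b,
    # so all n * m cells are independent of one another.
    return [[dot(row, col) for col in cols_of_b] for row in a]


inputs = [[1.0, 2.0],
          [3.0, 4.0]]       # two samples, two features each
weights = [[0.5, -1.0],
           [1.5, 2.0]]      # two features mapped to two outputs
print(matmul(inputs, weights))  # [[3.5, 3.0], [7.5, 5.0]]
```

Here the loops visit the four output cells one after another. On a GPU, independent cells like these can be assigned to different cores and computed at the same time.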

Can AI models run on CPUs, or do they require GPUs?

Yes, AI models can run on CPUs, especially during inference (when making predictions with a trained model). However, for large models or real-time performance, GPUs are often preferred due to their speed. CPUs are still useful for lightweight models, mobile applications, or environments where GPUs aren’t available.

Are there other processors used in AI besides CPUs and GPUs?

Yes. In addition to CPUs and GPUs, TPUs (Tensor Processing Units) and NPUs (Neural Processing Units) are specialized processors designed specifically for AI tasks. TPUs, developed by Google, are optimized for TensorFlow and high-performance AI computations, while NPUs are commonly used in mobile devices for on-device AI.

How GPUs run AI workloads

GPUs have become the workhorse of modern artificial intelligence, enabling the training and deployment of complex AI models that power everything from image recognition to natural language processing. Their ability to perform massive numbers of calculations simultaneously makes them well-suited for the computationally demanding tasks at the heart of AI. GPUs accelerate the training of AI models, enabling researchers and developers to iterate on models more quickly and unlock breakthroughs in AI capabilities.

Training AI models

GPUs are used to train AI models by performing the complex mathematical operations that are required to adjust the model's parameters. The training process involves feeding the model large amounts of data and then adjusting the model's parameters to minimize the error between the model's predictions and the actual data. GPUs can accelerate this process by performing multiple calculations simultaneously.
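The training loop described above can be sketched with a deliberately tiny model. The example below is an assumption-laden illustration, not how any particular framework trains: it fits a one-variable linear model with plain gradient descent on mean squared error, using made-up data and hypothetical names (`train`, `mean_squared_error`). It runs on a CPU. The per-sample error and gradient terms are the kind of repeated, independent arithmetic that a GPU would compute in parallel for a real model.

```python
def mean_squared_error(w: float, b: float, xs: list[float], ys: list[float]) -> float:
    """Average squared gap between predictions w * x + b and the targets."""
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs: list[float], ys: list[float],
          learning_rate: float = 0.05, steps: int = 500) -> tuple[float, float]:
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every sample; each term is independent.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Adjust the parameters a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Illustrative data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

print("error before training:", mean_squared_error(0.0, 0.0, xs, ys))
w, b = train(xs, ys)
print("learned parameters:", round(w, 2), round(b, 2))
print("error after training:", mean_squared_error(w, b, xs, ys))
```

With five samples and two parameters this finishes instantly on any processor. Real models repeat the same pattern over millions of samples and billions of parameters, which is where parallel hardware matters.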

Running AI models

Once an AI model is trained, it needs to be executed, often in real time, to make predictions on new data. GPUs play a critical role in this inference phase as well. Their ability to rapidly execute the complex calculations required to make predictions enables AI-powered applications to respond to user requests quickly and efficiently. Whether it's a self-driving car making split-second decisions or a chatbot providing instant responses, GPUs are essential for unlocking the real-time capabilities of AI models.

Why are GPUs important for AI?

GPUs are important for AI because they can accelerate the training and inference processes. This allows AI models to be developed and deployed more quickly and efficiently than with CPUs alone. As AI models become more complex, the need for GPUs will only increase.

Which GPUs are best for AI?

The best GPU for AI will depend on the specific task at hand. For example, a GPU with a large amount of memory may be better suited for running inference on large AI models, while a GPU with a high clock speed may be better suited for low-latency inference serving.
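As a rough way to reason about the memory side of that choice, the sketch below estimates how much GPU memory a model's weights alone would occupy. The model size, the GPU capacities, the 20% headroom figure, and the function names are all hypothetical values chosen for illustration. The estimate also ignores activations, caches, and framework overhead, so real requirements will be higher.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAMETER = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(num_parameters: int, dtype: str = "float16") -> float:
    """Memory for the weights only, in gigabytes (1 GB = 10**9 bytes)."""
    return num_parameters * BYTES_PER_PARAMETER[dtype] / 1e9


def weights_fit(num_parameters: int, gpu_memory_gb: float,
                dtype: str = "float16", headroom: float = 0.2) -> bool:
    """Check whether the weights fit while leaving a fraction of memory free.

    `headroom` is an assumed safety margin, not a measured requirement.
    """
    usable_gb = gpu_memory_gb * (1 - headroom)
    return weight_memory_gb(num_parameters, dtype) <= usable_gb


params = 7_000_000_000  # hypothetical 7-billion-parameter model

print(weight_memory_gb(params, "float16"))  # 14.0
print(weight_memory_gb(params, "int8"))     # 7.0
print(weights_fit(params, gpu_memory_gb=16))  # False
print(weights_fit(params, gpu_memory_gb=24))  # True
```

The arithmetic shows why precision matters as much as parameter count: halving the bytes per parameter halves the weight memory, which can change which GPU is large enough.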
