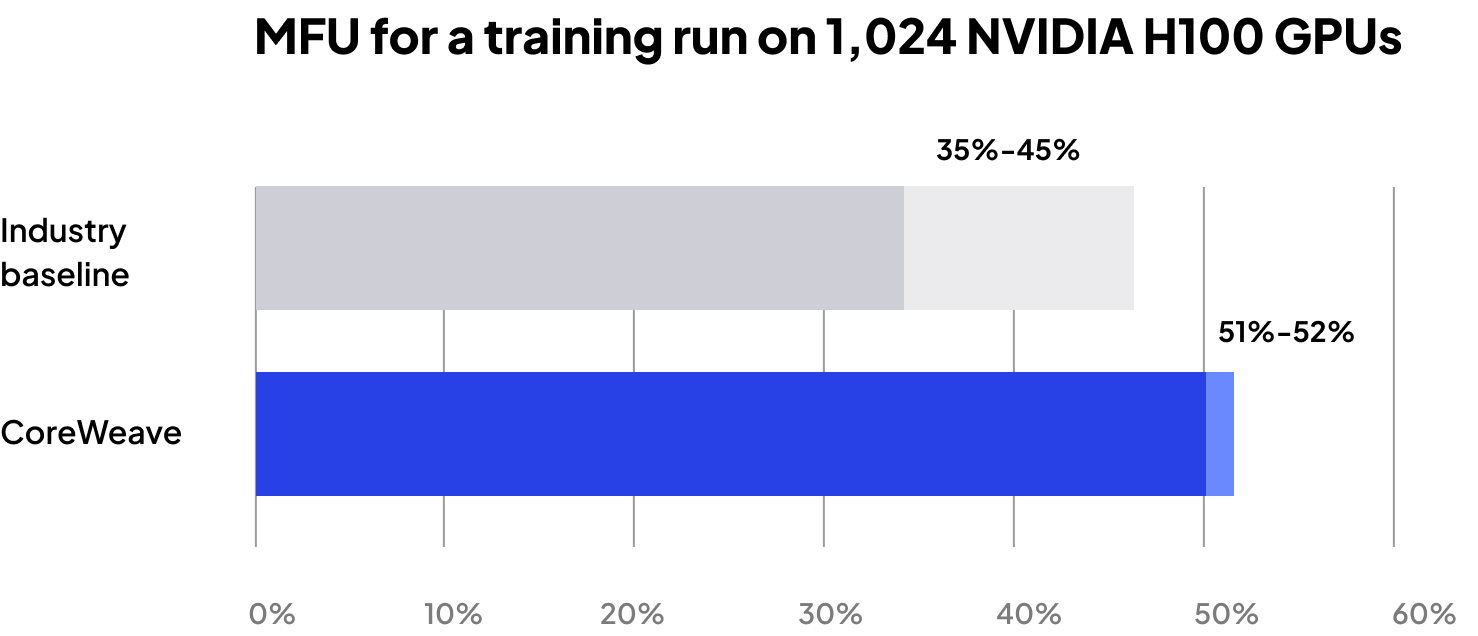

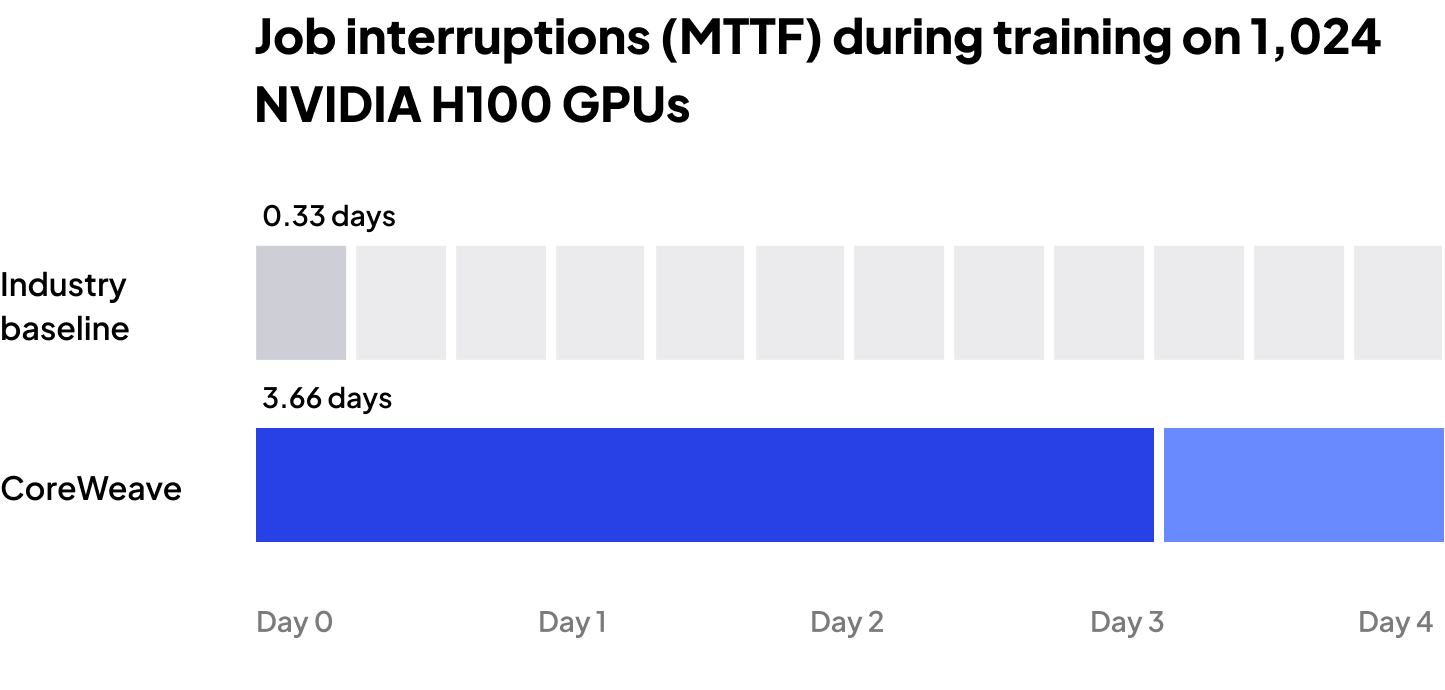

Head-to-head benchmarks

See how CoreWeave compares to industry leaders on performance, speed, and reliability of NVIDIA H100 GPU clusters.

Key performance and reliability metrics

Get a full breakdown of the metrics that determine real-world speed and reliability, including MFU, ETTR, and MTTF.

Architecture deep dive

Explore the impact of bare-metal GPU clusters, dual fabrics (NVIDIA Quantum InfiniBand networking and NVIDIA BlueField DPUs), SUNK orchestration, and Tensorizer.

Best-practice playbook for scale

Learn practical workflow optimizations you can replicate, like health-check-driven node eviction, automated job re-queue, and tokenization.